I was pretty excited when Let's Encrypt began their public beta on December 3, 2015. I spent some time looking for the best client and finally got my first certificate issued on January 11, 2016 using acme-nosudo, which was still called letsencrypt-nosudo back then. It was a nice solution: the source was short and sweet, it didn't require access to the certificate private key, and even the account private key was only touched by human-readable OpenSSL command line invocations.

As soon as Let's Encrypt became popular, people started demanding wildcard certificates, and as it turned out, this marked the next milestone in my ACME client stack. On March 13, 2018, wildcard support went live, and I started doing my research again to find the perfect stack.

Although ACME (and thus Let's Encrypt) supports many different methods of validation, wildcard certificates could only be validated using dns-01. This involves the ACME API giving the user a challenge, which must later be returned in the TXT record of _acme-challenge.domain.tld, so the client needs frequent access to DNS records. Most solutions solve the problem by invoking the APIs of the biggest DNS providers; however, I don't use any of those and have no plans to do so.
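For illustration, here is a minimal sketch of what ends up in that TXT record, following my reading of RFC 8555: the value is the base64url-encoded SHA-256 digest of the key authorization, which is the challenge token and the account key thumbprint joined by a dot. The function names are made up for this example, and the thumbprint is assumed to be computed elsewhere.

```python
import base64
import hashlib


def dns01_txt_value(token: str, account_thumbprint: str) -> str:
    """Return the TXT record value for a dns-01 challenge.

    Hypothetical helper: `account_thumbprint` is assumed to be the
    base64url-encoded JWK thumbprint of the ACME account key.
    """
    # Key authorization = challenge token + "." + account key thumbprint
    key_authorization = f"{token}.{account_thumbprint}"
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    # base64url encoding with the trailing '=' padding stripped
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def challenge_record_name(domain: str) -> str:
    """Return the DNS name that has to carry the TXT record."""
    # A wildcard such as *.domain.tld is validated at the base domain
    base = domain[2:] if domain.startswith("*.") else domain
    return f"_acme-challenge.{base}"
```

Since a wildcard name and its base domain map to the same record name, a certificate covering both needs two TXT values published under one name.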

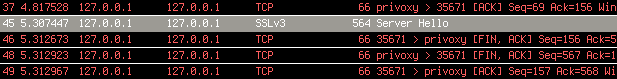

Fortunately, one day I bumped into acme-dns, which had an elegant solution to this problem. Just like the http-01 validator follows HTTP redirects, the dns-01 validator behaves in a similar way regarding CNAME records. By running a tiny specialized DNS server with a simple API, and pointing a CNAME record to a name that belongs to it, I could have my cake and eat it too. I only had to create the CNAME record once per domain and that's it.
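As a rough sketch of how simple that API is: to the best of my knowledge, acme-dns takes a POST to its `/update` endpoint with the credentials in `X-Api-User` and `X-Api-Key` headers and a JSON body naming the subdomain and the new TXT value. The helper name below is hypothetical, and the example only builds the request without sending it.

```python
import json
import urllib.request


def build_update_request(base_url: str, api_user: str, api_key: str,
                         subdomain: str, txt_value: str) -> urllib.request.Request:
    """Build (but do not send) an acme-dns TXT update request.

    Hypothetical helper; the endpoint and header names reflect my
    understanding of the acme-dns API, so check them against its docs.
    """
    body = json.dumps({"subdomain": subdomain, "txt": txt_value}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/update",
        data=body,
        headers={
            "X-Api-User": api_user,
            "X-Api-Key": api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would perform the actual update.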

The next step was finding a suitable ACME client with support for dns-01 and wildcard certificates. While there are lots of ACMEv1 clients, adoption of ACMEv2 is a bit slow, which limited my options. Also, since a whole new DNS API had to be supported, I preferred to find a project in a programming language I was comfortable contributing in.

This led me to sewer, written in Python, with full support for dns-01 and wildcard certificates, and infrastructure for DNS provider plugins. Writing the code was pretty painless, and I submitted a pull request on March 20, 2018. Since requests was already a dependency of the project, invoking the acme-dns HTTP API was easy, and implementing the interface was straightforward. The main problem was finding the acme-dns subdomain, since that's required by the HTTP API, and there's no functionality in the Python standard library to query a TXT record. I solved that using dnspython; however, that involved adding a new dependency to the project just for this small task.
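To give an idea of the lookup involved, here is a minimal sketch that is not the code from the pull request. It assumes dnspython 2.x (where `dns.resolver.resolve` exists) and, as a simplification, queries the CNAME record directly and takes the first label of its target as the acme-dns subdomain. Both function names are hypothetical.

```python
def subdomain_from_target(cname_target: str) -> str:
    """Return the first label of a CNAME target such as 'abc123.auth.example.org.'."""
    return cname_target.rstrip(".").split(".", 1)[0]


def lookup_acme_dns_subdomain(domain: str) -> str:
    """Resolve _acme-challenge.<domain> and return the acme-dns subdomain.

    Assumes the CNAME already points at a name served by acme-dns.
    """
    # dnspython is a third-party package; it is imported here so that
    # the pure helper above stays usable without it.
    import dns.resolver

    answer = dns.resolver.resolve(f"_acme-challenge.{domain}", "CNAME")
    return subdomain_from_target(answer[0].target.to_text())
```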

I tested the result in the staging environment, which is something I'd recommend for anyone playing with Let's Encrypt to avoid running into rate limits. Interestingly, both the staging and production Let's Encrypt endpoints failed on the first attempt but worked for subsequent requests (even lots of them), and I haven't debugged this part so far. I got my first certificate issued on April 28, 2018 using this new stack, and used the following script:

```python
from sys import argv

import sewer

# Credentials and endpoint of the acme-dns instance serving the challenge records
dns_class = sewer.AcmeDnsDns(
    ACME_DNS_API_USER='xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx',
    ACME_DNS_API_KEY='yyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyy',
    ACME_DNS_API_BASE_URL='http://127.0.0.1:zzzz',
)

with open('/path/to/account.key', 'r') as f:
    account_key = f.read()

with open('/path/to/certificate.key', 'r') as f:
    certificate_key = f.read()

# Request a wildcard certificate with the bare domain as an alternative name;
# the domain is taken from the first command line argument
client = sewer.Client(
    domain_name='*.' + argv[1],
    domain_alt_names=[argv[1]],
    dns_class=dns_class,
    account_key=account_key,
    certificate_key=certificate_key,
    # ACME_DIRECTORY_URL='https://acme-staging-v02...',
    LOG_LEVEL='DEBUG',
)

certificate = client.cert()

# Save the issued certificate as <domain>.crt in the current directory
with open(argv[1] + '.crt', 'w') as certificate_file:
    certificate_file.write(certificate)
```

By pointing all the CNAME records to the same acme-dns subdomain, I could hardcode that, and even though there's an API key, I also set acme-dns to listen on localhost only to limit exposure. By specifying the optional ACME_DIRECTORY_URL argument in the sewer.Client constructor, the script can easily be used on the staging Let's Encrypt infrastructure instead of the production one. Also, at the time of this writing, certificate_key is not yet in mainline sewer, so if you'd like to try it before it's merged, take a look at my pull request regarding this.