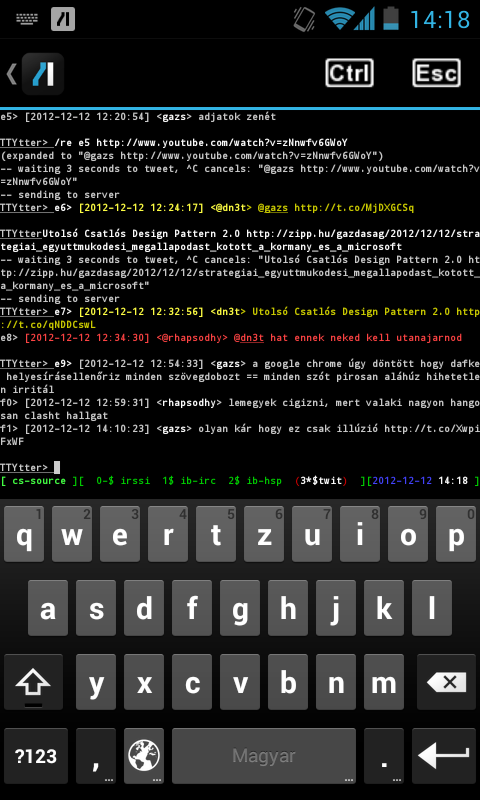

As I mentioned in February 2013, I started using mutt in December 2012, and as a transitional state, I've been using my three IMAP accounts in online mode, like I did with KMail. All outgoing mail from all three accounts got recorded in an mbox file called ~/Mail/Sent. At first this wasn't intentional, just a configuration glitch. But now I've realized that it has two positive side effects when I'm on a cellular Internet connection. This way, the MUA doesn't upload a second copy of each message to the Sent folder over IMAP after sending it over SMTP, which halves the data sent: sending mail is faster, and it saves precious megabytes of my mobile data plan.

However, I still prefer having my sent mail in the Sent folder of my IMAP accounts, so I needed a way to transfer the contents of an mbox file to IMAP folders based on the From field. I chose Python for the task, as its standard library supports both IMAP and mbox out of the box, and I already had good experience with the former. Many of the solutions I found used Python as well, but none of them supported multiple IMAP accounts, and many used deprecated classes or treated the process as a one-shot operation, while I planned to upload my mbox to IMAP regularly.

So I decided to write a simple script that does exactly what I need and still depends on nothing outside the standard library; it took me about an hour or two. The script can be invoked both from other modules and from the command line, and its core functionality is implemented in the process_mbox method of the OutboxSyncer class. The method takes a Mailbox object and a database reference as parameters; the latter is used to ensure that every message is uploaded exactly once, even in case of exceptions or parallel invocations.

```python
for key, msg in mbox.iteritems():
    account, date_time = msg.get_from().split(' ', 1)
    contents = mbox.get_string(key)
    msg_hash = HASH_ALGO(contents).hexdigest()
    params = (msg_hash, account)
```

The built-in iterator of the mailbox is used to walk through the messages in a memory-efficient way. Both key and msg are needed: the former to obtain the raw message as a byte string (contents), the latter to access parsed data, such as the sender (account) and the timestamp (date_time), which get_from() returns from the mbox "From " line preceding each message. The contents of the message are hashed (currently using SHA-256) to get a unique identifier for database storage. The last line builds params for later use in parameterized database queries.
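To make the parsing step concrete, here is a small sketch that writes a throwaway mbox and runs the same split-and-hash logic on it. The address, date and message body are made up. One assumption worth flagging: this is written for Python 3, where get_string() returns text and hashlib only accepts bytes, so the sketch calls get_bytes() instead; like get_string(), it leaves out the "From " line by default.

```python
import hashlib
import mailbox
import os
import tempfile
from email.utils import parsedate

HASH_ALGO = hashlib.sha256  # the post says SHA-256 is currently used


def make_sample_mbox(path):
    """Write a throwaway mbox holding one made-up message."""
    box = mailbox.mbox(path)
    msg = mailbox.mboxMessage('Subject: test\n\nHello\n')
    # get_from() later returns everything after "From " on this line
    msg.set_from('alice@example.com Fri Jul  8 12:08:34 2011')
    box.add(msg)
    box.flush()
    return box


def describe(mbox):
    """Yield (account, date tuple, hash) the same way the loop above does."""
    for key, msg in mbox.iteritems():
        account, date_time = msg.get_from().split(' ', 1)
        contents = mbox.get_bytes(key)  # raw bytes, "From " line excluded
        msg_hash = HASH_ALGO(contents).hexdigest()
        yield account, parsedate(date_time), msg_hash


if __name__ == '__main__':
    path = os.path.join(tempfile.mkdtemp(), 'Sent')
    for account, when, msg_hash in describe(make_sample_mbox(path)):
        print(account, when[:6], msg_hash[:12])
```

Note that email.utils.parsedate copes with the asctime-style timestamp found on mbox "From " lines, which is why the date can be handed to imaplib without further conversion.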

```python
    with db:
        cur.execute(
            'SELECT COUNT(*) FROM messages WHERE hash = ? AND account = ?',
            params)
        ((count,),) = cur.fetchall()
        if count == 0:
            cur.execute('INSERT INTO messages (hash, account) VALUES (?, ?)',
                        params)
        else:
            continue
```

By using the context manager of the database object, checking whether the message is free for processing and locking it are done in a single transaction, resulting in a ROLLBACK if an exception is raised and a COMMIT otherwise. The nested tuple unpacking used to assign count also asserts that the result has exactly one row with exactly one column, raising ValueError otherwise. If the message is locked or has already been uploaded, continue advances the mailbox iterator without further processing.
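One caveat, assuming the database is SQLite accessed through Python's sqlite3 module (the ? placeholders suggest this, but the post doesn't say): in its default mode, the module opens a transaction implicitly only before INSERT, UPDATE, DELETE or REPLACE. The SELECT therefore runs before the transaction starts, and two parallel runs could both see a count of 0. The sketch below shows one way to close that gap, under a hypothetical schema where (hash, account) is the primary key, so that the INSERT itself acts as the lock. The try_lock and count_rows names are illustrative only.

```python
import sqlite3

# Hypothetical schema: the composite primary key makes (hash, account) unique
SCHEMA = '''CREATE TABLE IF NOT EXISTS messages (
    hash    TEXT NOT NULL,
    account TEXT NOT NULL,
    success INTEGER NOT NULL DEFAULT 0,
    PRIMARY KEY (hash, account))'''


def try_lock(db, msg_hash, account):
    """Claim a message; return False if it is already locked or uploaded.

    The INSERT is a single atomic statement, so at most one run can
    succeed for a given (hash, account); a duplicate raises IntegrityError."""
    try:
        with db:  # commit on success, roll back on error
            db.execute('INSERT INTO messages (hash, account) VALUES (?, ?)',
                       (msg_hash, account))
    except sqlite3.IntegrityError:
        return False
    return True


def count_rows(db, msg_hash, account):
    """The post's lookup; the nested unpacking raises ValueError unless the
    result is exactly one row with exactly one column."""
    cur = db.execute(
        'SELECT COUNT(*) FROM messages WHERE hash = ? AND account = ?',
        (msg_hash, account))
    ((count,),) = cur.fetchall()
    return count


if __name__ == '__main__':
    db = sqlite3.connect(':memory:')
    db.execute(SCHEMA)
    print(try_lock(db, 'abc123', 'me@example.com'))    # True
    print(try_lock(db, 'abc123', 'me@example.com'))    # False
    print(count_rows(db, 'abc123', 'me@example.com'))  # 1
```

In either scheme, a hard crash (for example, a killed process) skips the cleanup code, so a row can be left behind with success = 0 and would need to be removed by hand before that message is retried.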

```python
    try:
        acc_cfg = accounts[account]
        imap = self.get_imap_connection(account, acc_cfg)
        response, _ = imap.append(acc_cfg['folder'], r'\Seen',
                                  parsedate(date_time), contents)
        assert response == 'OK'
```

After the message is locked for processing, it gets uploaded to the IMAP account, into the folder specified in the configuration. The class has a get_imap_connection method that calls the appropriate imaplib constructors and takes care of connection pooling, so the script doesn't connect and disconnect for every message processed. The status returned by the IMAP server is checked so that a failed upload doesn't go unnoticed.
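The post doesn't show get_imap_connection, so the following is only a guess at how such a pool could work: one logged-in imaplib.IMAP4_SSL connection per account, created on first use and reused afterwards. The ImapPool and append_checked names and the configuration keys host, user and password are hypothetical, and the factory parameter exists only so the cache can be exercised without a server. The sketch also checks the APPEND status with an explicit exception instead of assert, because assert statements are skipped when Python runs with -O.

```python
import imaplib


def connect_ssl(acc_cfg):
    """Hypothetical factory: open and log in an IMAP-over-SSL connection.
    The 'host', 'user' and 'password' keys are assumptions."""
    imap = imaplib.IMAP4_SSL(acc_cfg['host'])
    imap.login(acc_cfg['user'], acc_cfg['password'])
    return imap


class ImapPool:
    """Cache one logged-in connection per account (hypothetical helper)."""

    def __init__(self, factory=connect_ssl):
        self._factory = factory
        self._conns = {}

    def get(self, account, acc_cfg):
        # Reuse an existing connection instead of reconnecting per message
        if account not in self._conns:
            self._conns[account] = self._factory(acc_cfg)
        return self._conns[account]

    def close_all(self):
        # Log out of every cached connection and forget them
        for imap in self._conns.values():
            imap.logout()
        self._conns.clear()


def append_checked(imap, folder, flags, date_time, contents):
    """Upload a message and fail loudly unless the server answers OK."""
    typ, data = imap.append(folder, flags, date_time, contents)
    if typ != 'OK':
        raise imaplib.IMAP4.error('APPEND failed: %r' % (data,))
    return data
```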

```python
    except:
        with db:
            cur.execute('DELETE FROM messages WHERE hash = ? AND account = ?',
                        params)
        raise
    else:
        print('Appended', msg_hash, 'to', account)
        with db:
            cur.execute(
                'UPDATE messages SET success = 1 WHERE hash = ? AND account = ?',
                params)
```

In case of errors, the message lock is released and the exception is re-raised to stop the process. Otherwise, the success flag is set to 1, and processing continues with the next message. The source code is available in my GitHub repository under the MIT license; feel free to fork it, send pull requests, or comment on the code there.
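The lock, upload and release cycle can also be tried without an IMAP server. Below is a minimal, self-contained sketch of that control flow, assuming an in-memory SQLite database. The schema, the sync_one function and the upload callable are hypothetical stand-ins; only the four SQL statements mirror the fragments above.

```python
import sqlite3

# Hypothetical schema matching the columns the post's queries use
SCHEMA = ('CREATE TABLE IF NOT EXISTS messages '
          '(hash TEXT, account TEXT, success INTEGER DEFAULT 0)')


def sync_one(db, msg_hash, account, upload):
    """Lock, upload and record one message; return True if it was uploaded
    by this call, False if it was already locked or uploaded."""
    params = (msg_hash, account)
    with db:  # check and lock, mirroring the post's first with-block
        ((count,),) = db.execute(
            'SELECT COUNT(*) FROM messages WHERE hash = ? AND account = ?',
            params).fetchall()
        if count != 0:
            return False
        db.execute('INSERT INTO messages (hash, account) VALUES (?, ?)',
                   params)
    try:
        upload()  # stand-in for the IMAP APPEND
    except BaseException:  # same reach as the post's bare except
        with db:  # release the lock so a later run can retry
            db.execute('DELETE FROM messages WHERE hash = ? AND account = ?',
                       params)
        raise
    else:
        with db:  # mark the message as done
            db.execute('UPDATE messages SET success = 1 '
                       'WHERE hash = ? AND account = ?', params)
        return True


if __name__ == '__main__':
    db = sqlite3.connect(':memory:')
    db.execute(SCHEMA)
    print(sync_one(db, 'abc123', 'me@example.com', lambda: None))  # True
    print(sync_one(db, 'abc123', 'me@example.com', lambda: None))  # False
```

A failing upload leaves no row behind, so the next run picks the message up again; a successful one is skipped from then on.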