In November 2012, I started doing Arduino workshops in H.A.C.K.,
and after I announced it on some local channels, some people asked if it
could be recorded and published. At first, it seemed that recording
video would require the least effort to publish what we've done, and while
I thought otherwise after the first one, now we have a collection of simple
and robust tools, and snippets that glue them together, that can be reused
for all future workshops.

The recording part was simple, so I won't write about it outside this paragraph,
but although the following thoughts might seem trivial, important things
cannot be repeated enough times. Although built-in microphones are great
for lots of purposes, unless you're sitting in a silent room (no noise from
machines or people) or you already use a microphone with an amplifier,
a microphone closer to the speaker should be used. We already had a cheap
lavalier microphone with a preamplifier and 5 meters of cable, so we used
that. It also helps if the camera operator wears headphones, so the volume
level can be checked, and you won't freak out after importing the video to
the PC because the level is either so low that it has lots of noise or so
high that it becomes distorted.

I used a DV camera, so running dvgrab resulted in dvgrab-*.dv files.
Automatic splitting is sometimes desirable (not just because of
crippled file systems, but also because it makes it possible to transfer each
file after it's closed, lowering the amount of drive space needed); if it
isn't, it can be disabled by setting the split size to zero using -size 0.
It's also handy to enable automatic splitting upon new recordings with
-autosplit. Finally, -timestamp gives meaningful names to the files by using
the metadata recorded on the tape, including the exact date and time.
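
Put together, the three options could be wrapped like this. It is only a
sketch: the function name capture_tape and the default workshop- prefix are
made up for illustration, and it assumes the camera is already connected and
the tape is rewound.

```shell
# capture_tape [PREFIX]
# Hypothetical wrapper around the dvgrab options discussed above.
capture_tape() {
    prefix=${1:-workshop-}   # assumed base name for the output files
    # -size 0     disable splitting by file size
    # -autosplit  start a new file whenever a new recording begins on the tape
    # -timestamp  name files after the date and time stored on the tape
    dvgrab -size 0 -autosplit -timestamp "$prefix"
}
```

Calling capture_tape arduino- would then leave one arduino-*.dv file per
recording in the current directory.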

The camera I used had a stereo microphone and a matching stereo input
connector, but the external microphone was mono, with a plug that shorted the
right channel to the ground of the input jack, so the audio track had a left
channel carrying the sound and a right one with total silence. My problem was
that most software handled channel reduction by calculating an average, so the
amplitude of the resulting mono track was half of the original. Fortunately, I
found that FFmpeg is capable of powerful audio panning, so the following
parameter takes a stereo audio track, discards the right channel, and uses
the left audio channel as a mono output.

-filter_complex "pan=1:c0=c0"
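
To put a number on "half of the original", here is a small back-of-the-envelope
calculation. The helper name downmix_loss_db is hypothetical, and its two
arguments are linear amplitudes of the left and right channels.

```shell
# downmix_loss_db LEFT RIGHT
# Level change, in dB relative to the left channel, when a downmix
# simply averages the two channels.
downmix_loss_db() {
    LC_ALL=C awk -v l="$1" -v r="$2" 'BEGIN {
        avg = (l + r) / 2                             # plain average downmix
        printf "%.2f\n", 20 * log(avg / l) / log(10)  # amplitude ratio in dB
    }'
}
```

With a full-scale left channel and a silent right one, downmix_loss_db 1 0
prints -6.02, so the averaged track is about 6 dB quieter than what the pan
filter keeps.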

I also wanted to have a little watermark in the corner to inform viewers about
the web page of our hackerspace, so I created an image with matching resolution
in GIMP, wrote the domain name in the corner, and made it semi-transparent.
I used this method with MEncoder too, but FFmpeg can handle PNGs with 8-bit
alpha channels out of the box. The following combined command line adds the
watermark, fixes the audio track, and encodes the output using H.264
into an MKV container.

$ ffmpeg -i input.dv -i watermark.png -filter_complex "pan=1:c0=c0" \
    -filter_complex 'overlay' -vcodec libx264 output.mkv

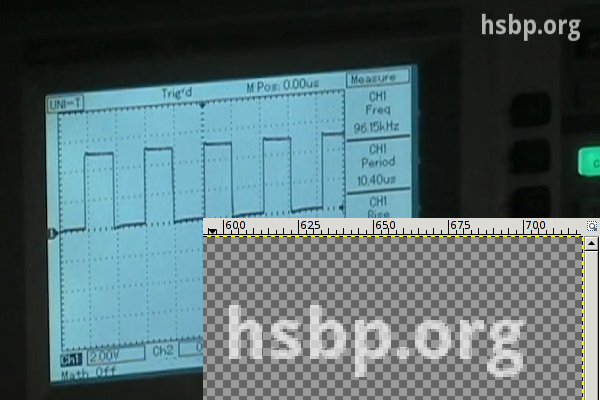

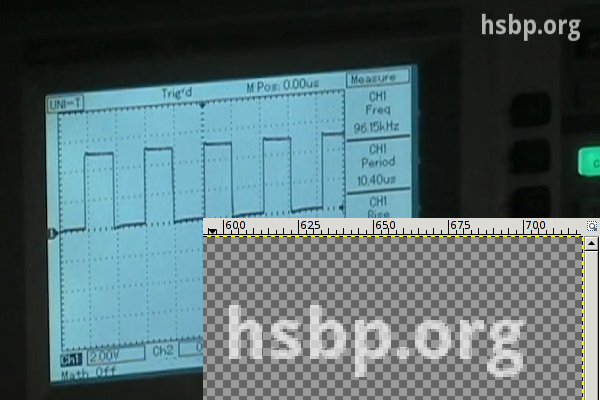

A cropped sample frame of the output can be seen below, with the zoomed

watermark opened in GIMP in the corner.

I chose MKV (Matroska) because of the great tools provided by
MKVToolNix (packaged under the same name in lowercase for Debian and
Ubuntu) that make it possible to merge files later in a safe way. Merging
was needed in my case for two reasons.

- If I had to work with split .dv files, I converted them to .mkv files
  one by one, and merged them in the end.
- I wanted to add a title to the beginning, which also required a merge with
  the recorded material.
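
For the first case, the one-by-one conversion can be scripted with a simple
loop around the combined command shown earlier. This is a sketch only:
convert_split_files is a name I made up, and it assumes the split files sit in
the current directory under the default dvgrab-*.dv names.

```shell
# convert_split_files [WATERMARK]
# Converts every dvgrab-*.dv file in the current directory to a matching .mkv.
convert_split_files() {
    watermark=${1:-watermark.png}
    for dv in dvgrab-*.dv; do
        [ -e "$dv" ] || continue      # nothing matched, the pattern stayed literal
        mkv="${dv%.dv}.mkv"           # dvgrab-001.dv becomes dvgrab-001.mkv
        ffmpeg -i "$dv" -i "$watermark" -filter_complex "pan=1:c0=c0" \
            -filter_complex 'overlay' -vcodec libx264 "$mkv" || return 1
    done
}
```

The loop stops at the first failed conversion, so a broken file doesn't go
unnoticed among the others.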

I tried putting the title screen together from scratch, but I found it much
easier to take the first 8 seconds of an already done MKV using mkvmerge,
place a fully opaque title image of matching size on it using the overlay I
wrote about above, and finally replace the sound with silence. In terms of
shell commands, it's like the following.

ffmpeg -i input.mkv -i title.png -filter_complex 'overlay' -an \
    -vcodec libx264 title-img.mkv

mkvmerge -o title-img-8s.mkv --split timecodes:00:00:08 title-img.mkv

rm -f title-img-8s-002.mkv

ffmpeg -i title-img-8s-001.mkv -f lavfi -i "aevalsrc=0::s=48000" \
    -shortest -c:v copy title.mkv

rm -f title-img-8s-001.mkv

The first FFmpeg invocation disables audio using the -an switch, and produces
title-img.mkv that contains our PNG image in the video track, and has no
audio. Then mkvmerge splits it into two files: an 8-second-long
title-img-8s-001.mkv, and the rest as title-img-8s-002.mkv. The latter gets
discarded right away, and a second FFmpeg invocation adds an audio track
containing nothing but silence with a sampling rate (48 kHz) matching that of
the recording. The -c:v copy parameter ensures that no video recompression is
done, and -shortest makes FFmpeg stop as soon as the shortest input ends
instead of reading as long as at least one input has data, since aevalsrc
would generate silence forever.
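
If the title should stay on screen for a different length of time, the split
point is the only value that needs to change. A tiny helper can build that
argument from a number of whole seconds; split_arg is a hypothetical name, not
part of MKVToolNix.

```shell
# split_arg SECONDS
# Prints the value for mkvmerge's --split option, e.g. timecodes:00:00:08.
split_arg() {
    s=$1   # whole seconds, without leading zeros
    printf 'timecodes:%02d:%02d:%02d\n' \
        $((s / 3600)) $((s % 3600 / 60)) $((s % 60))
}
```

It would be used as mkvmerge -o title-img-8s.mkv --split "$(split_arg 8)"
title-img.mkv in place of the hard-coded value.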

Finally, the title(s) and recording(s) can be joined together by using
mkvmerge for the purpose its name suggests at last. If you're lucky, the
command line is as simple as the following:

$ mkvmerge -o workshop.mkv title.mkv + rec1.mkv + rec2.mkv
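
With a varying number of recordings, typing the + separators by hand gets
tedious, so here is a sketch of a helper that inserts them. The name join_mkv
is my own invention, and it only covers the lucky case where no explicit track
mapping is needed.

```shell
# join_mkv OUTPUT FILE...
# Runs mkvmerge with a '+' placed between the input files.
join_mkv() {
    out=$1
    shift
    n=$#      # number of input files
    i=0
    for f in "$@"; do
        # re-append every file after the originals, preceded by '+' except the first
        if [ "$i" -gt 0 ]; then set -- "$@" + "$f"; else set -- "$@" "$f"; fi
        i=$((i + 1))
    done
    shift "$n"    # drop the original arguments, keeping the '+'-separated copy
    mkvmerge -o "$out" "$@"
}
```

For example, join_mkv workshop.mkv title.mkv rec1.mkv rec2.mkv runs the same
command as the one above.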

If you produced your input files using the methods described above and
mkvmerge displays an error message, it's almost certainly the following (since
all resolution/codec/etc. parameters should match, right?).

No append mapping was given for the file no. 1 ('rec1.mkv'). A default
mapping of 1:0:0:0,1:1:0:1 will be used instead. Please keep that in mind
if mkvmerge aborts with an error message regarding invalid '--append-to'
options.

Error: The track number 0 from the file 'dvgrab-001.mkv' cannot be
appended to the track number 0 from the file 'title.mkv'. The formats do
not match.

As the error message suggests, the order of the tracks can differ between MKV
files, so an explicit mapping must be provided to mkvmerge for matching the
tracks before concatenation. The mapping for the most common case (a single
audio and a single video track) is the following.

$ mkvmerge -o workshop.mkv t.mkv --append-to '1:0:0:1,1:1:0:0' + r1.mkv
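
Each comma-separated item of the mapping reads source file : source track :
destination file : destination track, with files numbered from zero in the
order they appear on the command line. The track IDs of a file can be listed
with mkvmerge -i file.mkv. Once they are known, the mapping for two files can
be assembled as below; append_mapping is a hypothetical helper, and the track
IDs in the example are assumed values.

```shell
# append_mapping DST_VIDEO DST_AUDIO SRC_VIDEO SRC_AUDIO
# Prints an --append-to value that appends file 1 (source) to file 0
# (destination), pairing video with video and audio with audio.
append_mapping() {
    printf '1:%s:0:%s,1:%s:0:%s\n' "$3" "$1" "$4" "$2"
}
```

For a first file with video on track 1 and audio on track 0, and a second file
with the two the other way round, append_mapping 1 0 0 1 prints
1:0:0:1,1:1:0:0, which is the mapping used above.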

I've had a pretty good experience with H.264-encoded MKV files: the size stayed
reasonable, and most players had no problem with them. The container also
supports subtitles, and since YouTube and other video sharing sites accept it
as well, I hope these tips help it get used more in recording and publishing
workshops.